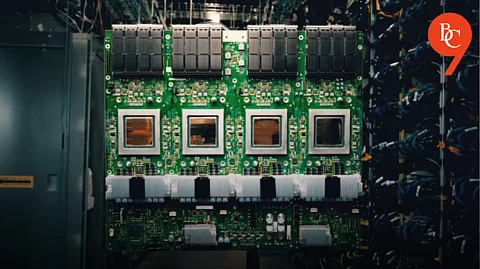

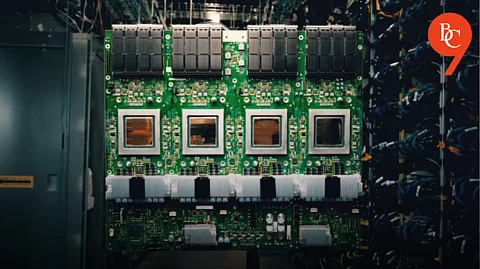

Google has unveiled Ironwood, its seventh-generation Tensor Processing Unit (TPU), at the Google Cloud Next 2025 conference. Purpose-built for AI inference, Ironwood is a major leap over Google's previous TPUs in computational power, scalability, and energy efficiency, and it strengthens the company's position in AI infrastructure. By Google's figures, a full Ironwood pod offers more peak compute than the world's fastest supercomputer, and the chip is designed to change how large-scale AI models are served.

In its largest configuration, a pod of 9,216 chips, Ironwood delivers 42.5 exaflops of compute. Google says this is more than 24x the 1.7 exaflops of the El Capitan supercomputer. The comparison is not like-for-like: Ironwood's figure is peak FP8 throughput, while El Capitan's is a double-precision (FP64) benchmark result. Each individual chip delivers a peak of 4,614 TFLOPS, enabling fast processing for AI workloads across industries.
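The headline figures are consistent with each other: multiplying the per-chip peak by the pod size recovers the pod total. A quick check in Python, using only the numbers quoted above (vendor peak figures, not measured throughput):

```python
# Figures quoted in the article (peak compute, as reported by Google).
CHIPS_PER_POD = 9_216
TFLOPS_PER_CHIP = 4_614          # peak TFLOPS per Ironwood chip
EL_CAPITAN_EXAFLOPS = 1.7        # El Capitan, as quoted

TFLOPS_PER_EXAFLOP = 1_000_000   # 1 exaflop = 10**18 FLOPS = 10**6 TFLOPS

# Pod total = chips per pod x per-chip peak.
pod_tflops = CHIPS_PER_POD * TFLOPS_PER_CHIP
pod_exaflops = pod_tflops / TFLOPS_PER_EXAFLOP

# Ratio of the two headline numbers.
ratio = pod_exaflops / EL_CAPITAN_EXAFLOPS

print(f"Pod peak: {pod_exaflops:.1f} exaflops")
print(f"Ratio vs. El Capitan: {ratio:.1f}x")
```

The ratio comes out at about 25x, which is why the claim is phrased as "more than 24x". Because each number is a peak figure at whatever precision its source quotes, the ratio says nothing about how the same workload would run on each machine.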

Each chip carries 192 GB of High Bandwidth Memory (HBM), six times as much as its predecessor, Trillium, so larger models and datasets fit on each chip. HBM bandwidth reaches 7.2 TBps per chip, speeding up data access and reducing latency in memory-intensive tasks.
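A rough, idealized way to see why bandwidth matters as much as capacity: if a model's weights fill a chip's HBM, a memory-bound step that reads every weight once cannot finish faster than capacity divided by bandwidth. The ratio of peak compute to bandwidth (the "ridge point" of a roofline model) likewise indicates how many FLOPs a kernel must perform per byte loaded before compute, rather than memory, becomes the bottleneck. This sketch uses the article's per-chip figures in decimal units and ignores caching, batching, and overlap, so treat the results as back-of-envelope bounds, not benchmarks.

```python
# Per-chip figures quoted in the article (decimal units: 1 TB = 1000 GB).
HBM_CAPACITY_GB = 192
HBM_BANDWIDTH_TBPS = 7.2      # terabytes per second
PEAK_TFLOPS = 4_614
TRILLIUM_RATIO = 6            # "six times as much as ... Trillium"

# Implied Trillium HBM capacity per chip.
trillium_hbm_gb = HBM_CAPACITY_GB / TRILLIUM_RATIO

# Lower bound on the time to read the entire HBM once, in seconds.
full_sweep_s = HBM_CAPACITY_GB / (HBM_BANDWIDTH_TBPS * 1000)

# Roofline ridge point: FLOPs per byte needed to be compute-bound.
ridge_flops_per_byte = (PEAK_TFLOPS * 1e12) / (HBM_BANDWIDTH_TBPS * 1e12)

print(f"Implied Trillium HBM: {trillium_hbm_gb:.0f} GB")
print(f"Full HBM sweep: {full_sweep_s * 1000:.1f} ms")
print(f"Ridge point: {ridge_flops_per_byte:.0f} FLOPs/byte")
```

On these numbers, a memory-bound decoding step that has to stream all 192 GB of weights would take at least about 27 ms per chip, and kernels doing fewer than roughly 640 FLOPs per byte would be limited by HBM bandwidth rather than by compute.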

Ironwood delivers twice the performance per watt of Trillium, and advanced liquid cooling lets it sustain heavy workloads efficiently. It is also nearly 30x more power-efficient than Google’s first Cloud TPU from 2018.

The TPU features an improved Inter-Chip Interconnect (ICI) bandwidth of 1.2 Tbps bidirectional, enabling faster communication between chips for distributed training and inference.
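To put the interconnect figure in concrete terms, the sketch below estimates how long a point-to-point transfer between two chips would take. It takes the quoted 1.2 Tbps at face value as terabits per second and assumes, as a simplification, that the bidirectional figure splits evenly into 0.6 Tbps per direction. It ignores latency, protocol overhead, and the multi-link topology of a real pod. The function name `transfer_time_s` is hypothetical.

```python
def transfer_time_s(payload_bytes: float, bidirectional_tbps: float) -> float:
    """Idealized one-way transfer time over a single chip-to-chip link.

    Assumes the bidirectional bandwidth (in terabits per second) is split
    evenly between the two directions and the link runs at full rated speed.
    """
    per_direction_bits_per_s = bidirectional_tbps * 1e12 / 2
    payload_bits = payload_bytes * 8   # bytes -> bits
    return payload_bits / per_direction_bits_per_s


ICI_BIDIRECTIONAL_TBPS = 1.2   # figure quoted in the article
one_gb = 1e9                   # 1 GB, decimal

t = transfer_time_s(one_gb, ICI_BIDIRECTIONAL_TBPS)
print(f"1 GB over one direction: {t * 1000:.1f} ms")
```

The estimate scales linearly with payload size and inversely with bandwidth, so doubling link bandwidth halves the idealized time. If the bandwidth is quoted in terabytes rather than terabits per second, the `* 8` conversion would be dropped.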

Ironwood ushers in what Google calls the “Age of Inference,” in which AI models proactively generate insights and interpretations rather than merely responding to queries. It is optimized for “thinking models,” such as Large Language Models (LLMs) and Mixture of Experts (MoE) models, which demand massive parallel processing and efficient memory access.